“Should I spend the time and effort on integrating Microformats into my site’s pages?”

Just during the past couple of weeks, the question has arisen yet again, and along with it came an additional development that further emphasizes why Microformats are a good thing and why webmasters should incorporate the protocol sooner rather than later. More on this in a minute.

I believe I was likely the first to propose using hCard Microformats as a component of local search engine optimization, back in 2006 (see: Tips for Local Search Engine Optimization). Back then, I had seen how Microformats were beginning to take off, and I saw indications of converging trends: the sharp interest from the major search engines in local search and yellow-pages-like functionality; the growing use of open formats and extensible semantic tagging; and, most telling of all, the involvement of a number of key technologists from within Yahoo! in the Microformats movement.

I knew that as search engines attempted to match the websites they crawled with more formal, local business listing data, they would have some difficulty using algorithms to interpret the data properly. They would face questions such as: What is the street address of the business on this webpage? What is the Business Name vs. other text on the page? What is the Street Name vs. the City Name? Other questions arise as well, since website designers mostly design for their human audience rather than for algorithms attempting to interpret meaning from raw data. For instance, what Business Category should this local business website be associated with?

Like other forms of semantic markup, Microformats label webpage content behind the scenes, explicitly identifying what each piece of data is while the page still displays normally for human users. If webpages of local businesses were to incorporate hCard Microformatting, I reasoned, then search engines would have an easier time associating the sites with map locations and business directory listings. Further, if a site contained such markup, the search engine could have a higher degree of confidence in accurately normalizing its data and matching it with user queries, so such pages could potentially rank better in the future.
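To make the idea of labeling content behind the scenes concrete, here is a minimal Python sketch that wraps a business’s contact details in hCard markup. The class names (`vcard`, `fn`, `org`, `adr`, `street-address`, `locality`, `region`, `postal-code`, `tel`) come from the hCard microformat; the `hcard_html` helper and the sample business are hypothetical, for illustration only. The visible text stays the same: only `class` attributes are added.

```python
from html import escape


def hcard_html(name: str, street: str, locality: str, region: str,
               postal_code: str, tel: str) -> str:
    """Return an HTML fragment labeling contact details with hCard classes.

    Hypothetical helper: the hCard class names are standard, but this
    function and its parameters are only an illustration.
    """
    e = escape  # escape &, <, > and quotes in the supplied text
    return (
        '<div class="vcard">\n'
        f'  <span class="fn org">{e(name)}</span>\n'
        '  <div class="adr">\n'
        f'    <span class="street-address">{e(street)}</span>,\n'
        f'    <span class="locality">{e(locality)}</span>,\n'
        f'    <span class="region">{e(region)}</span>\n'
        f'    <span class="postal-code">{e(postal_code)}</span>\n'
        '  </div>\n'
        f'  <span class="tel">{e(tel)}</span>\n'
        '</div>'
    )


# Hypothetical sample business; 555-01xx numbers are reserved for fiction.
print(hcard_html("Example Bakery", "123 Main St", "Springfield", "IL",
                 "62701", "+1-217-555-0100"))
```

Putting `fn org` on the same element is the hCard convention for marking the card as an organization rather than a person.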

However, when I introduced the idea, I was not aware of any search engine that was specifically seeking out this type of semantic data. While some Yahoo! personnel were throwing support behind the movement, there was no clear indication that their search engine would seek out specially labeled data fields or treat them any differently.

Still, there were additional reasons for using Microformats: they provided additional functionality for some devices and for users who installed special applications to read such content out of pages and easily make use of it. A great example is the Operator Toolbar for Firefox, which allowed a user to easily copy contact details from a webpage and save them into an address book, much as vCard electronic business card info can be transferred and harvested easily from email notes (vCard is supported by such mainstream applications as Microsoft Outlook).
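Tools of this kind essentially read hCard fields out of a page and hand them to an address book as a vCard. The Python sketch below assumes the hCard properties have already been extracted into a dictionary (its keys mirror hCard class names) and serializes them in vCard 3.0 format as defined in RFC 2426. `to_vcard` is a hypothetical helper, not Operator’s actual code, and it skips details such as long-line folding.

```python
def to_vcard(props: dict[str, str]) -> str:
    """Serialize hCard-style properties as a vCard 3.0 (RFC 2426) record.

    Sketch only: line folding and newline escaping are omitted.
    """
    def esc(value: str) -> str:
        # Escape backslash first, then the vCard separators "," and ";".
        return (value.replace("\\", "\\\\")
                     .replace(",", "\\,")
                     .replace(";", "\\;"))

    # vCard ADR components, in order, taken from hCard adr sub-properties.
    adr_parts = ("post-office-box", "extended-address", "street-address",
                 "locality", "region", "postal-code", "country-name")
    adr = ";".join(esc(props.get(part, "")) for part in adr_parts)

    lines = [
        "BEGIN:VCARD",
        "VERSION:3.0",
        f"FN:{esc(props['fn'])}",
        "N:;;;;",  # N is required in vCard 3.0; left empty for a business
        f"ORG:{esc(props.get('org', props['fn']))}",
        f"ADR;TYPE=WORK:{adr}",
    ]
    if "tel" in props:
        lines.append(f"TEL;TYPE=WORK:{props['tel']}")
    lines.append("END:VCARD")
    # vCard lines are terminated with CRLF.
    return "\r\n".join(lines) + "\r\n"


# Hypothetical extracted hCard data for a made-up business.
card = {"fn": "Example Bakery", "street-address": "123 Main St",
        "locality": "Springfield", "region": "IL", "postal-code": "62701",
        "tel": "+1-217-555-0100"}
print(to_vcard(card))
```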

The Yahoo! Local search team obviously believed that people could find Microformatting potentially useful, because they incorporated it into their Local Search results earlier in 2006.

Further supporting my prediction that this was an important and growing protocol, Google subsequently imitated Yahoo by incorporating hCard Microformats in Google Maps search results in 2007.

Meanwhile, at conferences and via email, many individuals asked me whether Google Maps was “reading” Microformats from webpages. I’d spoken with a few Google engineers during this period, and they answered pretty uniformly: if sufficient numbers of sites made use of this, they’d almost certainly incorporate it as yet another signal in local search data. I knew that there really weren’t “sufficient numbers” of sites incorporating it yet, but I continued to see indications that the protocol was growing as a trend, and a number of other optimization experts also threw their weight behind it as a component of good local site design. So, I’d still have to truthfully answer, “No, it’s not any sort of factor that will directly make your pages rank any higher, BUT, you should make use of it anyway!” For most of the local information pages I analyzed on the web, integrating Microformats seemed to require relatively little development effort.

Now fast-forward to the present in 2009, and the question of whether to use Microformats is still being posed to search marketing experts. On May 4th, someone asked well-known SEO Michael Gray whether hCard and other Microformats matter for SEO. I think Michael gave a pretty well-reasoned answer overall, although I believe Microformat protocols are a good deal simpler than he represents, and I think there are some good reasons not to be quite as conservative about using them as he suggests.

First of all, I believe the main advantages to using Microformats are:

- They can help search engines identify Business Name, Address, Phone, and Categories on webpages. Variations in formatting on various sites can contribute to misassociation of data elements. Imagine “Houston’s Restaurant on Dallas Street in Paris, Texas”. If an algorithm is attempting to interpret this in order to index the business/site, how does it know for certain what element is name vs. street address vs. city?

- They can help search engines associate the website with the listings in the engine’s own business directory, a vital step in “canonicalizing” business information. Google gets business listings from data aggregators and business directory partners, and it has to associate all the various sources of data for a particular business location with a single business listing. This is not a simple activity! Differences in how a business name is spelled, different ways of writing an address, and different phone numbers can all result in a business’s listings getting duplicated, diluting their ranking ability within Google Maps. So, having Microformats on your business webpage could help it get properly associated with directory listings already within Google.

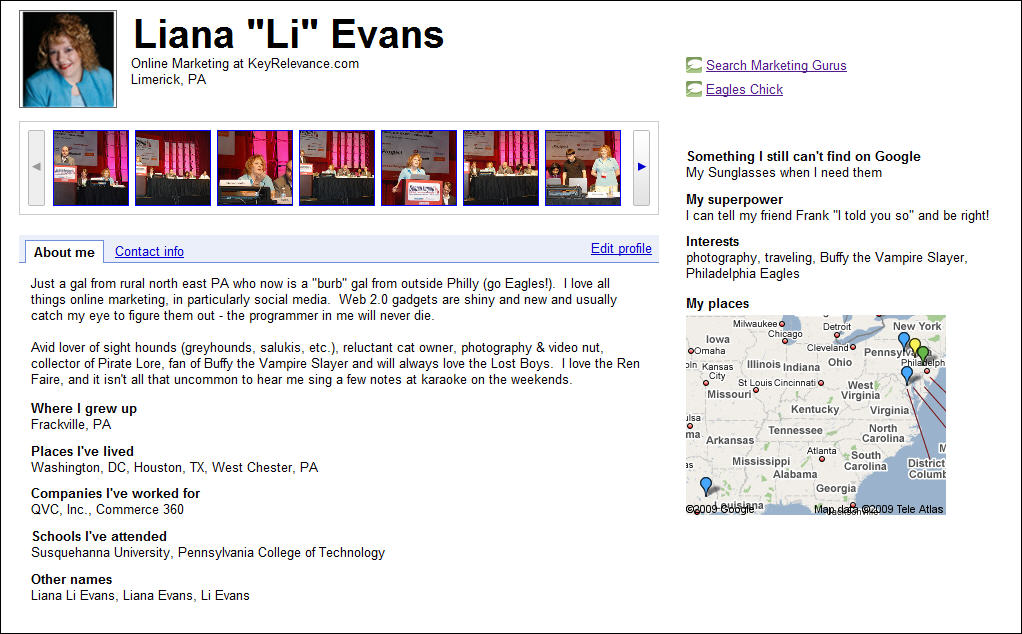

- Microformats make it easy for users to copy a business’s contact information to store in their address books and elsewhere.

- Microformats could also help open up content for use by other developers in unforeseen and advantageous ways. For instance, if you include the latitude and longitude of your business address in your pages, others can easily port the precise location over to the mapping app of their choice. Left to rely on the street address alone, mapping systems can frequently make significant errors.

- It’s just not all that hard to add them to sites that display addresses of local places. For most sites, some very simple development and coding, done within an hour or two, is all that’s required.
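The name/address disambiguation and latitude/longitude points above can be illustrated with a small Python sketch that reads hCard properties, including the `geo` sub-properties `latitude` and `longitude`, back out of a page using the standard-library `html.parser`. The sample markup labels the ambiguous “Houston’s Restaurant on Dallas Street in Paris, Texas” example, so a parser no longer has to guess which of “Houston”, “Dallas”, and “Paris” is the business name, the street, or the city. `HCardParser` is a hypothetical, simplified reader (one vcard per page, well-formed markup assumed), not how any search engine actually parses pages, and the coordinates are only approximate.

```python
from html.parser import HTMLParser

# A subset of hCard property class names, including the geo sub-properties.
PROPS = {"fn", "org", "street-address", "locality", "region",
         "postal-code", "country-name", "tel", "latitude", "longitude"}
# Elements that never get a closing tag, so they are not pushed on the stack.
VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
        "link", "meta", "source", "track", "wbr"}


class HCardParser(HTMLParser):
    """Collect the text of hCard properties found inside class="vcard".

    Sketch: assumes well-formed markup and a single vcard per page.
    """

    def __init__(self) -> None:
        super().__init__()
        self.stack: list[set[str]] = []  # class sets of currently open elements
        self.props: dict[str, str] = {}

    def _active(self) -> set[str]:
        # Property classes count only at or below a vcard root element.
        inside, active = False, set()
        for classes in self.stack:
            inside = inside or "vcard" in classes
            if inside:
                active |= classes & PROPS
        return active

    def handle_starttag(self, tag, attrs):
        if tag in VOID:
            return
        classes = set()
        for name, value in attrs:
            if name == "class" and value:
                classes = set(value.split())
        self.stack.append(classes)

    def handle_endtag(self, tag):
        if tag not in VOID and self.stack:
            self.stack.pop()

    def handle_data(self, data):
        text = " ".join(data.split())  # collapse whitespace
        if not text:
            return
        for prop in self._active():
            self.props[prop] = (self.props.get(prop, "") + " " + text).strip()


# The ambiguous example, with each piece explicitly labeled.
SAMPLE = """
<div class="vcard">
  <span class="fn org">Houston's Restaurant</span> on
  <span class="adr"><span class="street-address">Dallas Street</span> in
  <span class="locality">Paris</span>, <span class="region">Texas</span></span>
  <span class="geo"><span class="latitude">33.66</span>,
  <span class="longitude">-95.55</span></span>
</div>
"""

parser = HCardParser()
parser.feed(SAMPLE)
parser.close()
print(parser.props)
```

Text that sits inside the card but outside any property element (such as “on” and “in”) is simply ignored, which is exactly the labeling that an unmarked page lacks.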

Google actually does a pretty good job of “canonicalizing” classic business listing data from local business websites, so even if my theories on why Microformats could benefit SEO in the future are correct, there are a lot of sites where they likely wouldn’t have much impact on performance, even if/after Google begins recognizing them as a local search signal. They could help Google collapse duplicate listings down to a single one, which could boost that listing’s ranking weight. But for businesses with already easy-to-interpret addresses, or where Google hasn’t had difficulty grouping related listings together, they likely wouldn’t have any ranking effect whatsoever.
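The duplicate-listing problem can be illustrated with a toy example. The Python sketch below normalizes variations in business name, street, and phone number, then groups records that match after normalization. It is a hypothetical illustration of the general idea of canonicalizing listings, not Google’s actual method; the abbreviation table and the sample listings are made up.

```python
import re
from collections import defaultdict

# Hypothetical, tiny abbreviation table; real address normalizers are far larger.
ABBREV = {"street": "st", "avenue": "ave", "road": "rd", "suite": "ste"}


def norm_text(s: str) -> str:
    """Lowercase, drop apostrophes and punctuation, and abbreviate common words."""
    s = s.lower().replace("'", "").replace("\u2019", "")
    return " ".join(ABBREV.get(w, w) for w in re.findall(r"[a-z0-9]+", s))


def norm_phone(s: str) -> str:
    """Keep digits only; assumes 10-digit North American numbers."""
    return re.sub(r"\D", "", s)[-10:]


def group_listings(listings: list[dict[str, str]]) -> list[list[dict[str, str]]]:
    """Group records whose normalized name, street, and phone all match."""
    groups: dict[tuple[str, str, str], list[dict[str, str]]] = defaultdict(list)
    for rec in listings:
        key = (norm_text(rec["name"]), norm_text(rec["street"]),
               norm_phone(rec["tel"]))
        groups[key].append(rec)
    return list(groups.values())


# Hypothetical listings for one business as different data sources might write it.
LISTINGS = [
    {"name": "Houston's Restaurant", "street": "Dallas Street", "tel": "(903) 555-0100"},
    {"name": "Houstons Restaurant", "street": "Dallas St.", "tel": "903-555-0100"},
    {"name": "HOUSTON'S RESTAURANT", "street": "Dallas St", "tel": "+1 903 555 0100"},
    {"name": "Paris Diner", "street": "Main Street", "tel": "903-555-0199"},
]

for group in group_listings(LISTINGS):
    print(len(group), group[0]["name"])
```

The three spelling and formatting variants collapse into one group, but a listing with a genuinely different phone number would still end up in a group of its own.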

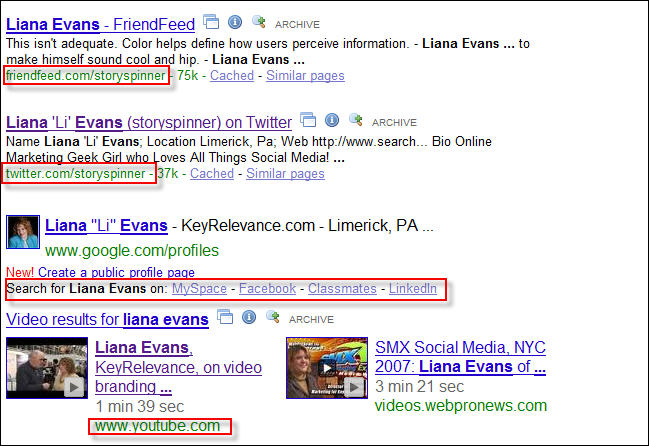

As of just last week, there’s an even more compelling reason to incorporate Microformats, though: Google is following close on the heels of Yahoo again and has announced that it is introducing “Rich Snippets” in Google search results pages. Rich Snippets are enhanced search result listings that can display star ratings and the number of reviews for content on the pages. Similar to Yahoo’s SearchMonkey, which allowed some customization of search listings, Google is initially enabling this special display for pages that incorporate the hReview Microformat.
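For sites with review content, a minimal hReview fragment looks something like the output of this Python sketch. The class names (`hreview`, `item`, `fn`, `summary`, `rating`, `reviewer`) come from the hReview microformat, whose default rating scale is 1 to 5; the `hreview_html` helper and the sample data are hypothetical, and Google’s own requirements for showing star ratings may go beyond this minimal markup.

```python
from html import escape


def hreview_html(item: str, rating: int, reviewer: str, summary: str) -> str:
    """Return an hReview fragment for a single review on the default 1-5 scale.

    Hypothetical helper: the class names come from hReview, but whether a
    search engine displays stars for this markup is up to that engine.
    """
    if not 1 <= rating <= 5:
        raise ValueError("hReview's default rating scale is 1 to 5")
    e = escape  # escape &, <, > and quotes in the supplied text
    return (
        '<div class="hreview">\n'
        f'  <span class="item"><span class="fn">{e(item)}</span></span>\n'
        f'  <span class="summary">{e(summary)}</span>\n'
        f'  Rating: <span class="rating">{rating}</span> out of 5\n'
        '  by <span class="reviewer vcard">'
        f'<span class="fn">{e(reviewer)}</span></span>\n'
        '</div>'
    )


# Hypothetical review of a made-up business.
print(hreview_html("Example Bakery", 4, "Jane Doe", "Great bread, friendly staff"))
```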

Many of us theorized that Yahoo’s SearchMonkey could be advantageous to sites, since search result listings that look different can stand out from the crowd, attract more users’ notice, and therefore have a greater chance of being clicked. Indeed, subsequent research showed that SearchMonkey’s special listing treatment could increase CTR by 15%!

There’s every reason to believe that display enhancements could improve CTR within Google search results as well, so sites that have reviews and ratings content have a strong incentive to adopt the hReview protocol. This is only the first stage of Google’s work on Rich Snippets, however, and it’s pretty certain that Google will bring more types of structured data into special display within search result listings. hCard and hCalendar content are top candidates for inclusion when Google expands this.

We’re now seeing adoption of hCard even on some highly popular sites such as Twitter, so it may be time to actually declare Microformats “mainstream”!

So you see, there are compelling reasons to use Microformatting in the here-and-now, rather than putting it off. It’s generally not difficult to implement, it enhances site functionality for good user-experience, it generally won’t interfere with existing graphic layout, it could eventually help in rankings, and it might soon help in terms of click-through rates or overall conversions.
