By Bill Slawski

A newly granted patent from Yahoo describes how information collected from usage log files from toolbars, ISPs, and web servers can be used to rank web pages, discover new pages, move a page into a higher tier in a multi-tier search engine, increase the weight of links and the relevance of anchor text for pages based upon those weights, and determine when a page was last changed or updated.

When you perform a search at a search engine, and enter a query term to search with, there are a number of steps that a search engine will take before displaying a set of results to you.

One of them is to sort the results to be shown to you in an order based upon a combination of relevance and importance, or popularity.

Over the past few years, that “popularity” may have been determined by a search engine in a few different ways. One might be based upon whether or not a page is frequently selected from search results in response to a particular query.

Another might be based upon a count by a search engine crawling program of the number of links that point to a page, so that the more incoming links to a page, the more popular the page might be considered. Incoming links might even be treated differently, so that a link from a more popular page may count more than a link from a less popular page.

Problems with Click and Link Popularity

Those measures of the popularity of a page, based upon clicks in search results and links pointing to that page, are somewhat limited. It’s possible for a page to be very popular and still be assigned a low popularity weight by a search engine.

Example

A web page is created, and doesn’t have many links pointing to it from other sites. People find the site interesting, and send emails to people they know about the site. The site gets a lot of visitors, but few links. It becomes popular, but the search engines don’t know that, because they see only a low number of links to the site and few or no clicks on the page in search results. A search engine may continue to consider the page to be one of little popularity.

Using Network Traffic Logs to Enhance Popularity Weights

Instead of just looking at those links and clicks, what if a search engine started paying attention to actual traffic to pages, measured by looking at traffic information from web browser plugins, web server logs, traffic server logs, and log files from other sources such as Internet Service Providers (ISPs)?

A good question, and it’s possible that at least one search engine has been using such information for a few years.

Yahoo was granted a patent today, originally filed in 2002, that describes how search traffic information could be used to create popularity weights for pages, to rerank search results based upon actual traffic to those pages, and in a number of other ways.

Here are some of them:

- The rank of a URL in search results might be influenced by the number of times the URL shows up in network traffic logs as a measure of popularity;

- New URLs can be discovered by a search engine when they appear in network traffic logs;

- More popular URLs can be placed into higher level tiers of a search index, based upon the number of times the URL appears in the network traffic logs;

- Weights can be assigned to links, where the link weights are used to determine popularity and the indexing of pages, based upon the number of times a URL is present in network traffic logs; and,

- Whether a page has been modified since the last time a search engine index was updated can be determined by looking at the traffic logs for a last modified date or an HTTP expiration date.
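The last item in the list can be illustrated with a minimal sketch. It assumes the traffic log has already been parsed and that each entry carries the `Last-Modified` header value of the response; the function name `needs_reindex` and its parameters are hypothetical and are not taken from the patent. An `Expires` header could be compared against the current time in the same way.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def needs_reindex(last_modified_header, indexed_at):
    """Return True if the logged Last-Modified date is newer than the index copy.

    last_modified_header: HTTP date string taken from a log entry (assumed format).
    indexed_at: timezone-aware datetime of the last index update for the page.
    """
    modified = parsedate_to_datetime(last_modified_header)
    return modified > indexed_at


# Hypothetical values for illustration only.
indexed = datetime(2008, 7, 1, tzinfo=timezone.utc)
changed = needs_reindex("Tue, 08 Jul 2008 10:00:00 GMT", indexed)
unchanged = needs_reindex("Mon, 02 Jun 2008 10:00:00 GMT", indexed)
```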

The patent granted to Yahoo is:

Using network traffic logs for search enhancement

Invented by Arkady Borkovsky, Douglas M. Cook, Jean-Marc Langlois, Tomi Poutanen, and Hongyuan Zha

Assigned to Yahoo

US Patent 7,398,271

Granted July 8, 2008

Filed April 16, 2002

Abstract

A method and apparatus for using network traffic logs for search enhancement is disclosed. According to one embodiment, network usage is tracked by generating log files. These log files among other things indicate the frequency web pages are referenced and modified. These log files or information from these log files can then be used to improve document ranking, improve web crawling, determine tiers in a multi-tiered index, determine where to insert a document in a multi-tiered index, determine link weights, and update a search engine index.

Network Usage Logs Improve Ranking Accuracy

The information contained in network usage logs can indicate how a network is actually being used, with popular web pages shown as being viewed more frequently than other web pages.

This popularity count could be used by itself to rank a page, or it could be combined with an older measure that uses such things as links pointing to the page, and clicks in search results.

Instead of looking at all traffic information for a page, visits over a fixed period of time may be counted, or new page views may be considered to be worth more than old page views.
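As a rough sketch of how newer page views might count for more than older ones, the snippet below halves the value of a view after a fixed number of days and then blends the result with an older link-based score. The half-life, the blending weight, and the function names are all assumptions made for illustration; the patent does not specify a formula.

```python
def decayed_popularity(view_ages_days, half_life_days=30.0):
    """Sum page views, with each view worth half as much every half_life_days."""
    return sum(0.5 ** (age / half_life_days) for age in view_ages_days)


def combined_score(traffic_score, link_score, traffic_weight=0.5):
    """Blend a traffic-based score with an older link- or click-based score."""
    return traffic_weight * traffic_score + (1.0 - traffic_weight) * link_score


# Three views each: one page viewed recently, one viewed two months ago.
recent = decayed_popularity([0, 0, 30])    # 1 + 1 + 0.5 = 2.5
stale = decayed_popularity([60, 60, 60])   # 0.25 * 3 = 0.75
blended = combined_score(recent, link_score=1.0)
```

Counting visits over a fixed period, the other option mentioned above, would amount to dropping every view older than the cutoff instead of discounting it.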

Better Web Crawling

Usually a search engine crawling program discovers new pages to index by finding links to pages on the pages that it crawls. The crawling program may not easily find sites that don’t have many links pointing to them.

But pages that show up in log files from ISPs or toolbars could be added to the queue of pages to be crawled by a search engine spider.

Pages that don’t have many links to them, but show up frequently in log information may even be promoted for faster processing by a search crawler.
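Here is a minimal sketch of that idea, assuming the log has been reduced to a plain list of visited URLs and that the crawler keeps a set of URLs it already knows about. The function name `build_crawl_queue` and the ordering rule (most visited first) are hypothetical choices, not details from the patent.

```python
from collections import Counter


def build_crawl_queue(log_urls, known_urls):
    """Return URLs seen in traffic logs but not yet crawled, most visited first."""
    visits = Counter(log_urls)
    new_urls = [url for url in visits if url not in known_urls]
    # Sort by descending visit count; break ties alphabetically for stable output.
    return sorted(new_urls, key=lambda url: (-visits[url], url))


# Hypothetical log entries and crawler state.
log_urls = ["/a", "/b", "/a", "/c", "/b", "/a"]
known_urls = {"/c"}
queue = build_crawl_queue(log_urls, known_urls)  # ["/a", "/b"]
```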

Multi-Tiered Search Indexes

It’s not unusual for a search engine to have more than one tier of indexes, with a relatively small first-tier index which includes the most popular documents. Lower tiers get relatively larger, and have relatively less popular documents included within them.

A search query would normally be run against the top level tier first, and if not enough results for a query are found in the first tier, the search engine might run the query against the next level of tiers of the index.
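The fall-through behavior can be sketched as follows, assuming each tier is a simple mapping from a query term to a list of URLs. The `tiered_search` name, the `min_results` cutoff, and the sample data are illustrative assumptions only.

```python
def tiered_search(query, tiers, min_results=3):
    """Query tiers in order, stopping once enough results have been collected."""
    results = []
    for index in tiers:
        for url in index.get(query, []):
            if url not in results:
                results.append(url)
        if len(results) >= min_results:
            break
    return results


# Hypothetical three-tier index, smallest and most popular tier first.
tiers = [
    {"zebra": ["a", "b"]},
    {"zebra": ["b", "c", "d"]},
    {"zebra": ["e"]},
]
found = tiered_search("zebra", tiers)  # ["a", "b", "c", "d"]
```

In this example the third tier is never consulted, because the first two already supply enough results.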

Network usage logs could be used to determine which tier of a multi-tier index should hold a particular page. For instance, a page in the second-tier index could be moved up to the first-tier index if its URL shows up with a high frequency in usage logs. More factors than frequency of a URL in a usage log could be used to determine which tier to assign a document.
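A minimal sketch of tier assignment by log frequency, assuming fixed visit-count thresholds. The threshold values and the `assign_tier` name are invented for illustration, and a real system would likely weigh other factors as the paragraph above notes.

```python
def assign_tier(visit_count, thresholds=(1000, 100)):
    """Return the 1-based tier for a page, given minimum visits for each upper tier."""
    for tier, minimum in enumerate(thresholds, start=1):
        if visit_count >= minimum:
            return tier
    # Pages below every threshold land in the lowest tier.
    return len(thresholds) + 1


first = assign_tier(5000)   # 1
second = assign_tier(250)   # 2
third = assign_tier(3)      # 3
```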

Usage Logs for Link Weights

One use search engines have for link information is to determine the popularity of a document.

The number of incoming links to a page may be used to determine the popularity of that page.

A weight may also be assigned based upon the relationship between words used in a link and the documents being linked to with that link. If there is a strong logical tie between a page and a word, then the relationship between the word and the page is given a relatively higher weight than if there wasn’t. This is known as a “correlation weight.” The word “zebra” used in the anchor text of a link would have a high correlation weight if the article it points to is about zebras. If the article is about automobiles, it would have a much lower correlation weight.

Links could also be assigned weights (“link weights”) based on looking at usage logs to see which links were selected to request a page. As the patent’s authors tell us:

Thus, those links that are frequently selected may be given a higher link weight than those links that are less frequently selected even when the links are to the same document.

In other words, pages pointed to by frequently followed links could be assigned higher popularity values than pages with more incoming links that are rarely followed.
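To make the contrast concrete, here is a small sketch that scores pages by how often their incoming links are followed instead of by how many links they have. The tuple layout, the function name `link_popularity`, and the sample numbers are assumptions for illustration.

```python
def link_popularity(links):
    """Sum the follow counts of incoming links for each target page.

    links: iterable of (source_url, target_url, times_followed) tuples.
    """
    popularity = {}
    for _source, target, followed in links:
        popularity[target] = popularity.get(target, 0) + followed
    return popularity


# Page "A" has one busy link; page "B" has three links that are rarely followed.
links = [
    ("s1", "A", 500),
    ("s2", "B", 2),
    ("s3", "B", 2),
    ("s4", "B", 2),
]
scores = link_popularity(links)  # {"A": 500, "B": 6}
```

By raw link count "B" would outrank "A", while the traffic-weighted scores reverse that order.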

Link Weights Used to Determine the Relevance of Pages for Anchor Text

If a word pointing to a page is in a link (as anchor text), and the link is one that is frequently followed, then the relevance of that page for the word in the anchor text may be increased in the search engine’s index.

For example, assume that a link to a document has the word “zebra”, and another link to the same document has the word “engine”. If the “zebra” link is rarely followed, then the fact that “zebra” is in a link to the document should not significantly increase the correlation weight between the word and the document. On the other hand, if the “engine” link is frequently followed, the fact that the word “engine” is in a frequently followed link to the document may be used to significantly increase the correlation weight between the word “engine” and the document.
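The “zebra” and “engine” example can be sketched as follows, assuming the correlation weight for an anchor word is simply that link's share of all followed clicks into the document. That share-based formula, the function name `anchor_correlation`, and the click counts are hypothetical; the patent does not give a specific calculation.

```python
from collections import defaultdict


def anchor_correlation(link_clicks):
    """Weight each (anchor word, document) pair by its share of followed clicks.

    link_clicks: list of (anchor_word, target_url, times_followed) tuples.
    """
    totals = defaultdict(int)
    for _word, target, clicks in link_clicks:
        totals[target] += clicks

    weights = defaultdict(float)
    for word, target, clicks in link_clicks:
        if totals[target]:
            weights[(word, target)] += clicks / totals[target]
    return dict(weights)


# Two links to the same document: one rarely followed, one frequently followed.
weights = anchor_correlation([("zebra", "doc", 2), ("engine", "doc", 98)])
```

Under these assumed counts, “engine” ends up with a far higher weight for the document than “zebra”, which matches the behavior described above.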

Conclusion

This patent was originally filed back in 2002, and some of the processes it covers are also discussed in more recent patent filings and papers from the search engines, such as popularity information being used to determine which tier a page might be on in a multi-tier search engine.

Some of the processes it describes have been assumed by many to be processes that a search engine uses, such as discovering new pages from information gathered by search engine toolbars.

A few of the processes described haven’t been discussed much, if at all, such as the weight of a link (and the relevance of anchor text in that link) being increased if it is a frequently used link, and decreased if it isn’t used often.

It’s possible that some of the processes described in this patent haven’t been used by a search engine, but it does appear that search engines are paying more and more attention to user information that they do collect from places like toolbars and log files from different sources. This patent is one of the earliest from a major search engine that describes how such user data could be used in a fair amount of detail.

Another patent from Yahoo was also granted this week, on how anchor text can be used to determine the relevancy of a page for specific words. I’ve written about that over on SEO by the Sea, in Yahoo Patents Anchor Text Relevance in Search Indexing.

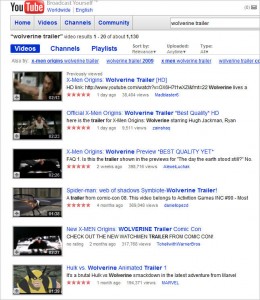

With the rise of Twitter and its limit of 140 characters (250 if you turn off javascript), when it comes to maximizing space to get your message across, every character counts. With that fact in mind, URL shorteners are cropping up all over the place. Tweetburner, Bit.Ly, TinyURL and Cli.gs are some great URL shortening services, and they will actually track your click-throughs.

Then let’s look at the whole “oh I found this I want to blog about it” piece of the marketing and social media puzzle. Someone who finds some great content via one of these framing URL shortening services, and isn’t quite tech savvy, pulls the shortened URL from the address bar. Guess what: your site doesn’t get the credit for that link, the shortened URL does. Again, this is basically like hijacking your content.