You may be aware that Bing recently released a new version of their Webmaster Tools, which is intended to help webmasters improve their sites’ performance in Bing search. Duane Forrester, one of Microsoft’s Senior Program Managers and an SEO, asked a number of us to give his team feedback on what could be improved about the interface. So I thought it might be good to provide that feedback openly, via a blog post: not to beat up on Bing, but to bounce ideas around the community.

Giving feedback to any of the search engines about their webmaster tools seems a bit fraught, given the near-irreconcilable tension between what Search Engine Optimization experts want and the search engineers’ desire to rank search results neutrally and fairly. Even so, the exercise is worthwhile, because if the tools are useless or pointless to us, there’s little point in the search engines going to the effort of providing them in the first place.

Having worked in a major corporation before, I feel a bit inhibited about throwing out suggestions that I know Bing’s engineers could deem no-gos. I tend to self-censor to a degree because I don’t want to seem naive about the issues the search engines must weigh in trying to limit undue influence from those attempting to subvert the SERPs.

That said, I’m aware of the potentially conflicting considerations, and, as I noted earlier, the exercise is futile if the tools don’t provide worthwhile functionality to their intended users.

One of the primary problems I see with Bing’s Webmaster Tools is the sense of “keeping up with the Joneses” one gets when reviewing the interface. Bing’s development team is in a near no-win situation whatever they do in this area. On one hand, if they copied the functionality found in Google’s Webmaster Tools, they’d be accused of being mere imitators, even though some elements of Google’s toolset arguably ought to be provided. On the other hand, if they went even further in providing usefulness to webmasters, they could become more prone to unethical marketing exploits. So there were likely few easy solutions or obvious moves available to them.

Further, their focus on comparing their tool with Google’s tends to be a bit incestuous, and there’s the usual engineering myopia of providing what engineers think people would need or want, rather than looking at the problem directly from a webmaster’s point of view. (This bias can’t be attributed to Duane, since he was an external SEO before working for Microsoft, but this basic utility-design problem is definitely inherent in both Bing Webmaster Tools and Google Webmaster Tools.)

Likewise, Google Webmaster Tools suffers a bit from the conflict between its engineers’ goals and the needs of its target audience. So I’d prefer that none of the search engines look at one another’s offerings when designing such things, and instead focus solely on providing as much functionality as webmasters might need. As things currently stand, it feels as though all of the search engines are offering “placebo utilities” to webmasters: the interfaces present a confusing melange of features that are ultimately not all that useful, and instead serve as smoke and mirrors to make it appear that they’re trying to help webmasters optimize their sites.

Moving past my perhaps-unfair assertions, let’s look at what the new Bing tools provide, and what could be done better.

First, a head-nod to Vanessa Fox for her comparison of Bing’s and Google’s Webmaster Tools. As the creator of Google’s Webmaster Tools, she is likely one of the best people around to examine such utilities with a critical eye, and she is well positioned to know how much info a search engine might realistically be able to provide, and in what format. Likewise, a nod to Barry Schwartz’s post about Bing’s tools.

Both Vanessa and Barry berate Microsoft for building the tools so that they require Silverlight to view and use. I don’t consider that much of a big deal, because it’s a sort of “religious difference” in how the tools were constructed (those of us who are jaded about how Microsoft has strong-armed proprietary technology in the past might react negatively against Silverlight, as might those who avoid it out of conservative privacy practices).

However, looking at Bing Webmaster Tools purely from the perspective of how well it functions, I’m not concerned about this technology dependency, since I think the majority of webmasters will be unaffected by it. I’m not a fan of Microsoft’s programming protocols AT ALL, and it may be my former bias as a technologist within a megacorporation creeping in, but the Silverlight criticism seems slightly out of step with the primary issue of whether the tools provide vital functionality. It may also not be unfair of Microsoft to decide that if you wish to play in their Bing sandbox, they have the right to promote their proprietary technology to do so. By comparison, I have a friend who is a privacy freak and surfs with Flash disabled; Google’s Webmaster Tools requires Flash for one or two graphs, which would be just as irritating to him as Silverlight.

Both Barry and Vanessa mention that Bing’s new interface dropped the backlink reports, and I agree with them both on this point. This was one area where I’d hoped Bing would take the opportunity to be more open than Google. If the engineers were going to look at competitors’ tools while building Bing’s, they should have tried to recreate the backlink reports Yahoo! provides in Site Explorer, which seem to give a more comprehensive picture of backlinks. Since webmasters are told that inbound links are a major ranking criterion, omitting this info leaves a major gap.

Bing also obscures the number of pages indexed when one performs a “site:” search by domain, so removing this functionality, such as it was, from the old interface eroded some of the tools’ usefulness. Perhaps their pre-development survey of webmasters produced feedback that the earlier backlink report “wasn’t useful”, but that would have been mainly because it was less robust than one like Yahoo’s.

Vanessa mentions that they don’t provide data export features, and I agree completely that this is a major oversight. As a programmer, I know how relatively easy it is to code a data export to XML or CSV, and considering how long it took to launch the product, it’s somewhat shocking they didn’t include this at launch. (You’d think Microsoft would never miss an opportunity to provide a “click to export to Excel” button!)
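
To illustrate how little code an export takes, here is a minimal Python sketch using only the standard library’s `csv` and `xml.etree.ElementTree` modules. The report rows and field names (`url`, `http_status`, `last_crawled`) are made-up examples for illustration, not Bing’s actual data or report schema.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Hypothetical crawl-error report rows; field names and values are illustrative only.
FIELDS = ["url", "http_status", "last_crawled"]
ROWS = [
    {"url": "http://www.example.com/old-page", "http_status": "404", "last_crawled": "2009-11-20"},
    {"url": "http://www.example.com/search?q=a&page=2", "http_status": "500", "last_crawled": "2009-11-21"},
]


def to_csv(rows, fields):
    """Serialize report rows as CSV text: a header line plus one line per row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fields)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def to_xml(rows, root_tag="report", row_tag="row"):
    """Serialize report rows as XML: one <row> element per row, one child element per field."""
    root = ET.Element(root_tag)
    for row in rows:
        item = ET.SubElement(root, row_tag)
        for key, value in row.items():
            ET.SubElement(item, key).text = str(value)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(to_csv(ROWS, FIELDS))
    print(to_xml(ROWS))
```

The `csv` module handles quoting and `ElementTree` escapes characters such as the `&` in the second URL, which covers most of what a hand-rolled exporter tends to get wrong.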

Vanessa stated that they also ditched the Domain Score, and remarked that this was a good thing. I disagree on this point, because I think any insight the engines give us into a ranking score is helpful in assessing how effective or important a site or domain is. Was this the same as the small bar scales Microsoft had been providing for a handful of the more important site pages in the interface? Although these graphical page-ranking scores were entirely derivative of Google Toolbar PageRank, I would have preferred that they provide even more in that area. Bing is in a position to experiment with providing more info than Google does, and to see just how dangerous it really is to be more open with marketers!

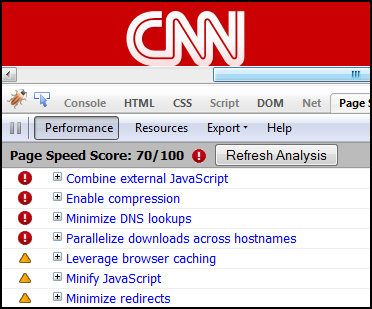

Vanessa did a great comparison of the analytics Bing provides versus Google Webmaster Tools and Google Analytics. While analytics from Bing’s perspective are interesting to us all, she notes one aspect of the graphs that also strikes me as an issue: as a webmaster/SEO, when I see indexation decreasing, I’d really like to know why. This is particularly irritating where Bing is concerned, because I think it’s widely felt in the industry that Bing simply indexes a lot less than Google.

Many of my clients want to know what they can do to increase their indexation in Bing. I see the same thing with my test websites: I may have 30,000 discrete pages, and Bing appears to index far fewer than Yahoo or Google. The feature allowing one to manually submit URLs seems to acknowledge this sad fact, but in context it almost sends the wrong message! “Oh, our spider’s legs get tired out there, so bring your pages directly to us.” Vanessa has a point here: why should I feel I need to do this if you accept Sitemaps? And if my clients or I have tens of thousands of pages, manually submitting fifty at a time is simply not sustainable. I can understand having an interface to rapidly submit brand-new content pages, but what’s missing is clear communication about what is restricting my indexation.
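
For scale: at fifty URLs per manual submission, 30,000 pages would take 30,000 / 50 = 600 separate submissions. A Sitemap, by contrast, lists them all in one file, since the sitemaps.org protocol allows up to 50,000 URLs per file. The minimal Python sketch below builds such files from a URL list. The `example.com` URLs are placeholders, it emits only the required `<loc>` element, and a site with more than 50,000 URLs would also need a sitemap index file, which this sketch does not generate.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # per-file URL limit in the sitemaps.org protocol


def build_sitemaps(urls):
    """Return a list of sitemap XML strings, each holding at most MAX_URLS_PER_FILE URLs."""
    documents = []
    for start in range(0, len(urls), MAX_URLS_PER_FILE):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + MAX_URLS_PER_FILE]:
            url_el = ET.SubElement(urlset, "url")
            ET.SubElement(url_el, "loc").text = url  # ElementTree escapes &, <, >
        xml_body = ET.tostring(urlset, encoding="unicode")
        documents.append('<?xml version="1.0" encoding="UTF-8"?>\n' + xml_body)
    return documents


if __name__ == "__main__":
    # Placeholder URLs standing in for a 30,000-page site.
    pages = [f"http://www.example.com/page-{i}.html" for i in range(30_000)]
    sitemaps = build_sitemaps(pages)
    print(len(sitemaps), "sitemap file(s)")
```

Whether a given engine then crawls everything listed is, of course, exactly the open question raised above; the file only removes the submission bottleneck.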

The features showing whether robots restrictions, malware, or crawl errors could be affecting a site are all great. However, if everything is already functioning fine, the tools need to answer further questions: Why isn’t my site crawled more deeply? And why don’t my pages rank higher? Ultimately, webmasters ask: What can I do to improve my site’s performance? Understandably, Bing and the other search engines are reluctant to provide too much info in this area. However, there are things they could provide:

- Perhaps a tool where webmasters could select one of their pages, or submit a URL, to find out what primary keyword phrase Bing considers the page to be about?

- Tools that report quality issues detected on specific pages. For instance, is a page missing a Meta Description or Title? Are there pages where the Title or Meta Description doesn’t appear relevant to the page’s content, or is out of sync with the anchor text used in inbound links? Are there images that could be improved with ALT text?

- Why not simply inform webmasters that you consider their links and other references to be too few or too low in importance?

- Bring back the scales showing page scores, and actually go further in providing some sort of numeric values!

- Actually show us that you spider all pages, even if you opt not to keep all in your active index! This would at least give the impression that you are able to index as deeply as Google, but choose not to display everything for other reasons.

- How about informing us of pages, or types of pages (based on querystring parameters, perhaps), that appear to be heavily duplicated?

- Tie our Webmaster Tools in with local listing account management for local businesses, so everything could be done via one interface.

- Provide means for us to customize the appearance of our SERP listings a little bit, similar to Yahoo SearchMonkey and Google’s Rich Snippets.

- Provide tools that help us improve overall site quality, such as flagging pages on our site with incorrect copyright notices, misspellings, or orphaned pages.

- Consider providing an A/B testing tool so you could tell us which of two page layouts performs better in Bing search!

- Inform us if you detect that a site has some sort of inferior link hierarchy — this could indicate usability problems affecting humans as well as spiders.

- Provide more granular details on how well we perform in Bing Image Search, Mobile Search, Video Search, etc. Currently, I cannot tell how many of my images are indexed in Bing.

- For that matter, it would be nice if you supported an Image Sitemap file, like the one Google offers.

- Finally, for a really pie-in-the-sky request: You operate web search for Facebook — would your contract allow you to tell us how often our webpages appear in Facebook search results and how many clickthroughs we might get from those?
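
The basic on-page checks in the quality-issues suggestion are simple enough that a webmaster can approximate them locally today. Here is a minimal Python sketch using the standard library’s `html.parser`; it flags a missing `<title>`, a missing meta description, and images without ALT text. It only illustrates the idea and is not how Bing evaluates pages; the class and function names are hypothetical, and it cannot judge whether a Title is actually *relevant*, which is the harder question only the engine can answer.

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Collects the title text, meta description, and images lacking ALT text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.in_title = False
        self.meta_description = None
        self.images_missing_alt = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attribute values may be None for valueless attributes
        if tag == "title":
            self.in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            # Treats an empty alt as missing, though decorative images may legitimately use alt="".
            self.images_missing_alt.append(attrs.get("src") or "(no src)")

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def audit(html):
    """Return a list of human-readable issues found in one HTML page."""
    parser = PageAudit()
    parser.feed(html)
    parser.close()
    issues = []
    if not parser.title.strip():
        issues.append("missing <title>")
    if not (parser.meta_description or "").strip():
        issues.append("missing meta description")
    for src in parser.images_missing_alt:
        issues.append(f"image without ALT text: {src}")
    return issues


if __name__ == "__main__":
    page = '<html><head><title>Widgets</title></head><body><img src="a.png"></body></html>'
    for issue in audit(page):
        print(issue)
```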

Anyway, there’s my feedback, criticism, and some ideas for additional features. I’m not trying to beat anyone up, and I’m genuinely grateful for any feedback we receive from the search engines on our performance within them. I mainly ask that search engineers keep in mind that what we want to know is “What can we do to improve performance?”, and that they provide us with tools to accomplish that insofar as they can without compromising the integrity of the engine.

Where Bing is concerned, I believe you could be even more open than Google in order to further differentiate yourselves, and experiment to see what’s really possible in terms of openness!