Google rolled out Instant Previews three months ago, in November. When I looked over the new feature, it struck me as very odd that some pages in Google search results had no cached view of the indexed content, yet still offered this new option of viewing a screengrab of the page.

For instance, quite a number of newspaper websites choose to disable the cached views of their pages. Just search for “newspaper”, and you can immediately see an example such as the New York Times:

As you can see, the listing in the Google search results for The New York Times has no link under it for “Cached”. However, it does have a magnifying glass — the Instant Previews button — which, when clicked, reveals a screengrab of the NYT homepage from Google’s copy of the page when they last spidered it.

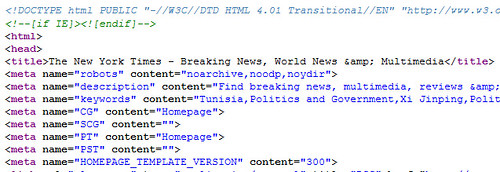

The reason the New York Times doesn’t have a “Cached” link is that they purposefully set up a robots meta tag specifying that they didn’t want Google and other search engines to make a cached copy available for users to see or access.

The NYT “robots” meta tag has “noarchive” as one of its parameters. This parameter tells Google not to store a cached copy of the page. Or, more accurately, as Google’s Webmaster Guidelines state, “noarchive” instructs Google not to provide the link to the cached copy (Google has to store some encoded version of any page it spiders in order to offer it up in the SERPs).
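To check which directives a page declares, a short script can read its robots meta tag. The following is a minimal sketch using only Python’s standard library; the class name `RobotsMetaParser`, the helper `robots_directives`, and the sample markup are all made up for illustration and are not copied from the New York Times site.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so default to "".
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").strip().lower() != "robots":
            return
        # The content attribute is a comma-separated list of directives.
        for directive in attr_map.get("content", "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def robots_directives(html):
    """Return the set of robots directives declared in an HTML string."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical markup for illustration only.
SAMPLE_HEAD = '<head><meta name="robots" content="index, follow, noarchive"></head>'

if __name__ == "__main__":
    found = robots_directives(SAMPLE_HEAD)
    print("noarchive declared:", "noarchive" in found)
```

Run against a page’s real HTML source, the same helper would show whether the publisher has opted out of the cached link.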

Newspapers with paywalls often do this when they want to be spidered and indexed by Google but also want to limit free access. For example, they may allow each user “one click free”: the user can freely access and read one article from the site, but any subsequent article may require the user to register or pay. So, those news publishers do not want users to be able to read the article for free via a cached copy.

Various other types of sites use “noarchive” when they have changing copy on the page that might get out of sync between Google and the live page, or when there’s some sensitive content that shouldn’t be visible on other sites.

So, when Google launched Instant Previews, it would have been common sense for publishers who had chosen to suppress their cached view to expect that the Instant Preview would be suppressed as well. Frankly, the Instant Preview images are a representation of the webpages. They are a snapshot. They are a type of cached view of the page.

Oh, sure, the Instant Preview is often too small to reasonably read the print on the page. However, that’s not the point. The print isn’t the only reason why some have opted to disable cached views; for some, the images are also sensitive. And the print’s not always too small to be read.

Now, Google has provided a way to suppress the Instant Previews. According to the FAQ, using the “nosnippet” parameter in the robots meta tag suppresses Instant Previews.
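The mismatch is easier to see when the behavior described in this post is written out as a lookup. The sketch below is only a model of that behavior, not Google’s implementation; the function name `suppressed_features` and the feature labels are invented for illustration.

```python
def suppressed_features(robots_content):
    """Map a robots meta 'content' string to the search-listing features it hides.

    Models only the behavior described in this post:
      noarchive -> hides the "Cached" link
      nosnippet -> hides the text snippet and the Instant Preview
    """
    directives = {
        d.strip().lower() for d in robots_content.split(",") if d.strip()
    }
    suppressed = set()
    if "noarchive" in directives:
        suppressed.add("cached_link")
    if "nosnippet" in directives:
        suppressed.add("text_snippet")
        suppressed.add("instant_preview")
    return suppressed


if __name__ == "__main__":
    for content in ("noarchive", "nosnippet", "noarchive, nosnippet"):
        print(content, "->", sorted(suppressed_features(content)))
```

Under this model, a publisher who set only “noarchive” still gets an Instant Preview, and the only way to turn the preview off also removes the text snippet.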

But the point is that “noarchive” should have been expected to apply to the Instant Previews. Even though the preview images are chopped in some cases or are difficult to read, they are a type of cached copy of the page. And there are people who don’t mind the text snippets showing up in the search listings but do mind having the preview screengrabs appear.

Publishers who were already using the robots “noarchive” parameter shouldn’t now have to go back and add “nosnippet” parameters.

Google’s deployment of the Instant Previews, while ignoring the noarchive specification in favor of a lesser-used control parameter, is something of a betrayal of trust for webmasters.